After working on uniformly weighted bidirectional path tracing (BDPT) for a while, the render path seems to be in a pretty good state. The BDPT renders almost exactly match the reference renders. However, they are still off in places.

While the direct lighting and the first bounce match exactly, from bounce 2 onward the indirect lighting in the BDPT renders seems weaker than in the reference. I'm not quite sure why this is happening. Possible causes include a hidden intersection-testing issue, the weights not being updated properly, or the eye-light path connection not working (which seems unlikely, as the first bounce matches perfectly).

Renders

|

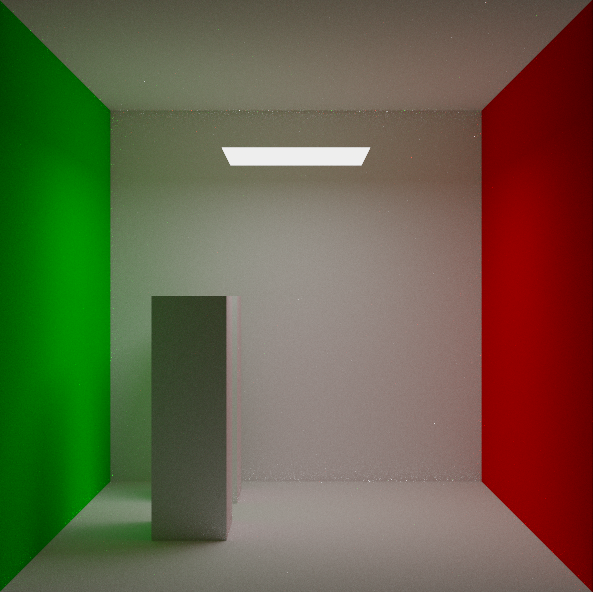

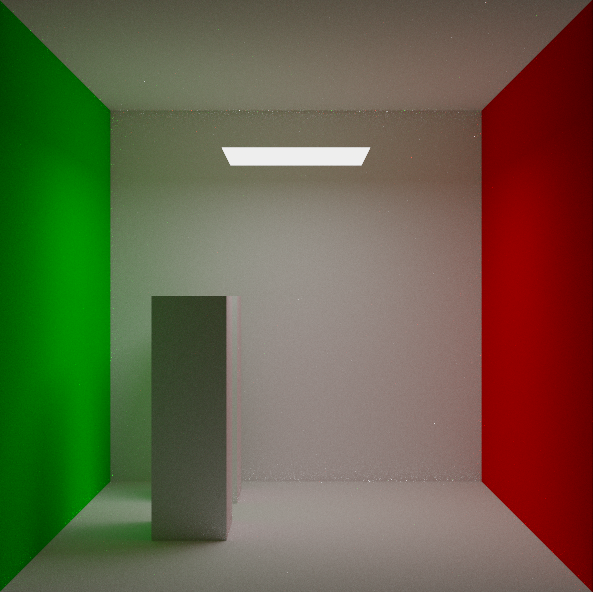

| BDPT - 6 bounces (I think the fireflies are caused by extremely short eye-light connections, as the eye-light transfer has a 1/d^2) |

|

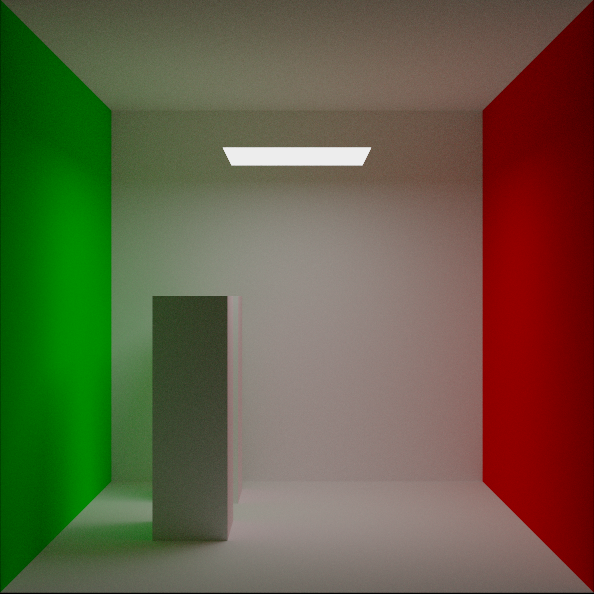

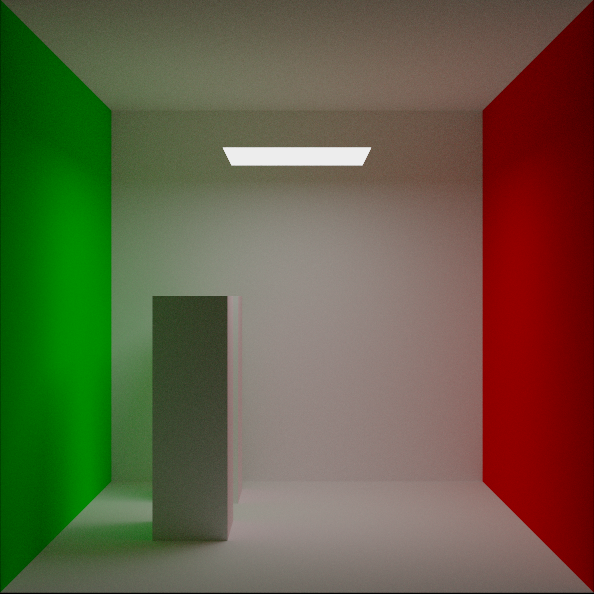

| Unidirectional + MIS Reference - 6 bounces |

|

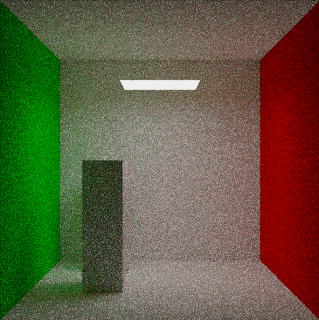

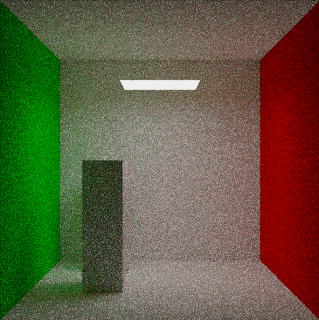

| BDPT - 5 second render - 6 bounces |

|

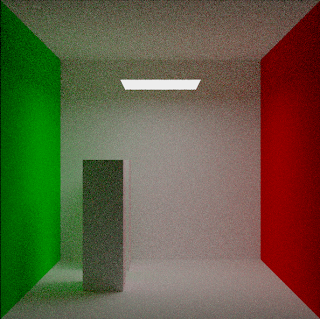

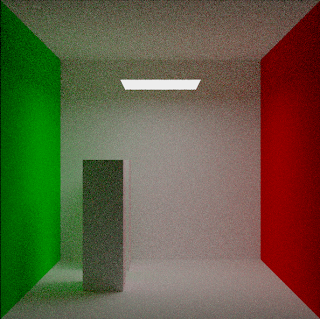

| MIS Unidirectional Ref - 5 second render - 6 bounces |

From the comparisons above, including the 5 second renders, BDPT with uniform weighting is actually not that great next to the MIS unidirectional reference. I will be implementing MIS-weighted BDPT next.

Validation

For validation, I compared each BDPT bounce against the same bounce from the reference renderer. In addition, I enabled Russian roulette in both the BDPT and the reference renderer and compared the 'infinite' bounce renders. As noted above, direct lighting and bounce 1 match, while the other bounces are slightly off.

Note

In a BDPT, because you have two paths (eye and light), the highest partially accounted bounce is actually EP + LP deep, where EP and LP are the eye-path and light-path bounce limits. Note the word "partially". For example, let's say we have 8 eye and 8 light bounces, for a max of 16 bounces. The BDPT will weight the 16-bounce solution as if every split (15E 1L, 14E 2L, etc.) contributed, even though the 8E 8L cap means only the 8E 8L split can actually be constructed. So for validation purposes I had to throw out a lot of combinations; otherwise the image contains incomplete bounces.
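
To make the "partially accounted" point concrete, here is a minimal Python sketch. It is a simplified counting model, not the renderer's actual code: the function name `constructible_splits` is made up for this example, and it assumes a bounce of depth n can be split into any (eye, light) pair with eye + light = n, glossing over which special cases the implementation skips.

```python
def constructible_splits(total_bounces, max_eye, max_light):
    """List the (eye, light) bounce splits of total_bounces that fit under both caps.

    Simplified model (an assumption for illustration): every split with
    eye + light == total_bounces is a candidate strategy.
    """
    splits = []
    for eye in range(total_bounces + 1):
        light = total_bounces - eye
        if eye <= max_eye and light <= max_light:
            splits.append((eye, light))
    return splits


def coverage(total_bounces, max_eye, max_light):
    """Fraction of candidate splits that can actually be constructed."""
    available = len(constructible_splits(total_bounces, max_eye, max_light))
    return available / (total_bounces + 1)


if __name__ == "__main__":
    for n in (8, 9, 16):
        print(n, constructible_splits(n, 8, 8), coverage(n, 8, 8))
```

Under this model, with an 8E 8L cap every split is available up to 8 bounces, coverage starts dropping at 9 bounces, and at 16 bounces only the 8E 8L split is left. If the weights are still computed as if all splits existed, those deeper bounces are missing energy.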

Implementation Notes

Light Eye Path Connection

I used the projected solid angle (cos*cos/d^2, i.e. the geometry term between the two vertices) of the light vertex w.r.t. the eye vertex to transfer the weights.
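
As a rough illustration of that connection term, here is a minimal Python sketch (not the renderer's actual code). The function name `geometry_term` and the tuple-based vectors are made up for this example, the normals are assumed to be unit length, and no visibility test is done.

```python
import math


def geometry_term(p_eye, n_eye, p_light, n_light):
    """Return cos_eye * cos_light / d^2 between two surface points.

    Assumptions for this sketch: points and normals are 3-tuples,
    normals are unit length, and visibility is handled elsewhere.
    """
    delta = [b - a for a, b in zip(p_eye, p_light)]
    dist2 = sum(c * c for c in delta)
    if dist2 == 0.0:
        return 0.0  # coincident points: skip the connection

    inv_len = 1.0 / math.sqrt(dist2)
    w = [c * inv_len for c in delta]  # unit direction from eye vertex to light vertex

    # Cosines at both ends, clamped so back-facing connections contribute nothing.
    cos_eye = max(0.0, sum(a * b for a, b in zip(n_eye, w)))
    cos_light = max(0.0, -sum(a * b for a, b in zip(n_light, w)))
    return cos_eye * cos_light / dist2


if __name__ == "__main__":
    up, down = (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)
    print(geometry_term((0.0, 0.0, 0.0), up, (0.0, 0.0, 2.0), down))
    print(geometry_term((0.0, 0.0, 0.0), up, (0.0, 0.0, 0.01), down))
```

With both surfaces facing each other, a connection of length 2 gives 0.25, while one of length 0.01 gives roughly 10000. That blow-up for very short connections is the 1/d^2 behaviour I suspect is behind the fireflies.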

Uniform Weights

I ignored the 'light path hits the camera' and the 'construct shadow ray from camera to light vertex' cases. The uniform weight is one over the number of remaining strategies, so for a length 2 path the weight is 1/2.
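
The weight arithmetic can be sketched as follows. This is only an illustration under stated assumptions: "length" is taken to mean the number of edges from camera to light, a path with k edges is taken to have k + 2 candidate sampling strategies in total (the usual bidirectional count), and the function name `uniform_weight` is made up for this example.

```python
def uniform_weight(path_edges, ignored_strategies=2):
    """Uniform weight per strategy for a path with path_edges edges.

    Assumptions for this sketch: a path with k edges has k + 2 candidate
    strategies, and two of them ('light path hits the camera' and
    'shadow ray from camera to light vertex') are ignored.
    """
    if path_edges < 2:
        raise ValueError("need at least camera -> surface -> light")
    remaining = (path_edges + 2) - ignored_strategies
    return 1.0 / remaining


if __name__ == "__main__":
    for k in (2, 3, 6):
        print(k, uniform_weight(k))
```

For a length 2 path this leaves two strategies (the eye path hitting the light, and connecting the first eye vertex to a light vertex), which gives the 1/2 mentioned above.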

Extra 'eye path accidentally hits light' bounce

I have an extra bounce where I detect whether my eye path accidentally hits the light and, if so, add the light's contribution to the color. Only this case is handled in the extra bounce (any paths involving shadow rays are ignored).